GHOST

1.1.2

General, Hybrid, and Optimized Sparse Toolkit

Numerical operations which involve communication.

Functions

| ghost_error | ghost_dot (void *res, ghost_densemat *vec1, ghost_densemat *vec2) | Compute the global dot product of two dense vectors/matrices. |

| ghost_error | ghost_spmv (ghost_densemat *res, ghost_sparsemat *mat, ghost_densemat *invec, ghost_spmv_opts opts) | Multiply a sparse matrix with a dense vector. |

| ghost_error | ghost_gemm (ghost_densemat *x, ghost_densemat *v, const char *transv, ghost_densemat *w, const char *transw, void *alpha, void *beta, int reduce, ghost_gemm_flags flags) | Compute the general (dense) matrix-matrix product x = v*w. |

| ghost_error | ghost_normalize (ghost_densemat *x) | Normalizes a densemat (interpreted as a block vector). |

| ghost_error | ghost_tsmtspmtsm (ghost_densemat *x, ghost_densemat *v, ghost_densemat *w, ghost_sparsemat *A, void *alpha, void *beta, int reduce) | Multiply a transposed distributed dense tall skinny matrix with a sparse matrix and another distributed dense tall skinny matrix and Allreduce the result. |

| ghost_error | ghost_tsmttsm (ghost_densemat *x, ghost_densemat *v, ghost_densemat *w, void *alpha, void *beta, int reduce, int conjv, ghost_gemm_flags flags) | Multiply a transposed distributed dense tall skinny matrix with another distributed dense tall skinny matrix and Allreduce the result. |

These operations usually call one or more local operations (cf. Local operations) together with communication calls.

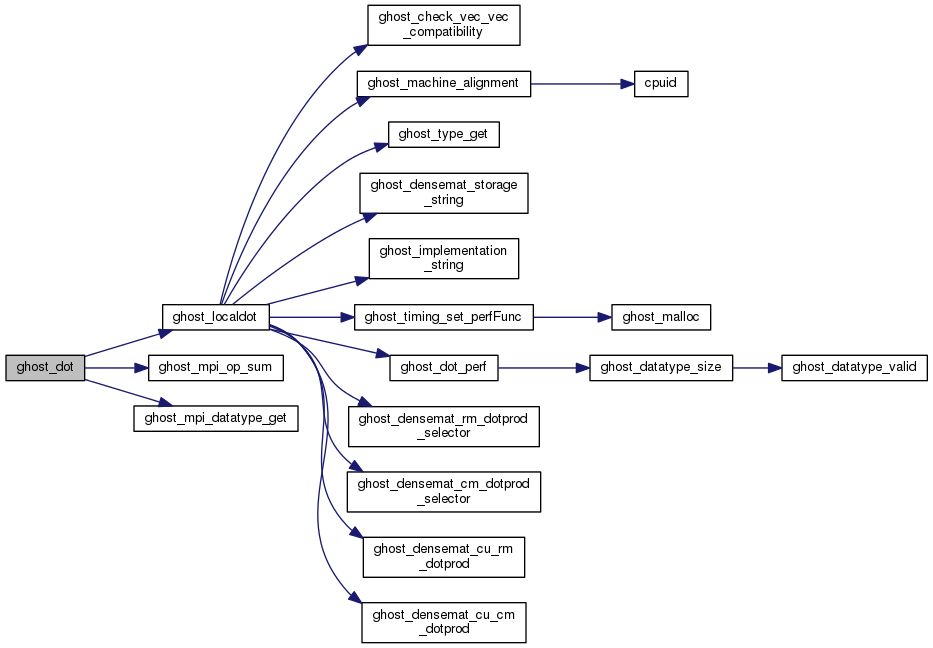

ghost_error ghost_dot (void *res, ghost_densemat *vec1, ghost_densemat *vec2)

Compute the global dot product of two dense vectors/matrices.

| res | Where to store the result. |

| vec1 | The first vector/matrix. |

| vec2 | The second vector/matrix. |

This function first computes the local dot product and then performs an allreduce on the result.
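The two-step pattern described here (local dot product, then a global sum) can be sketched in plain C. This is not GHOST's implementation: `local_dot` and `global_dot_sketch` are hypothetical names, only a single real-valued column is handled, and the allreduce is imitated by summing the partial results of `nranks` contiguous chunks, where an MPI program would call `MPI_Allreduce` with `MPI_SUM`.

```c
#include <assert.h>
#include <stddef.h>

/* Local part: dot product over the rows owned by one process. */
static double local_dot(const double *a, const double *b, size_t n)
{
    double sum = 0.0;
    for (size_t i = 0; i < n; i++) {
        sum += a[i] * b[i];
    }
    return sum;
}

/* Hypothetical stand-in for the allreduce: the vectors of global length n
 * are split into nranks contiguous chunks, each simulated rank computes its
 * local dot product, and the partial results are summed. */
static double global_dot_sketch(const double *a, const double *b,
                                size_t n, size_t nranks)
{
    assert(nranks > 0);
    double result = 0.0;
    for (size_t r = 0; r < nranks; r++) {
        size_t begin = r * n / nranks;       /* first row of rank r */
        size_t end = (r + 1) * n / nranks;   /* one past its last row */
        result += local_dot(a + begin, b + begin, end - begin);
    }
    return result;
}
```

For exactly representable inputs the result does not depend on how many chunks are used; in general, the summation order (and therefore the rounding) can differ with the number of processes.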

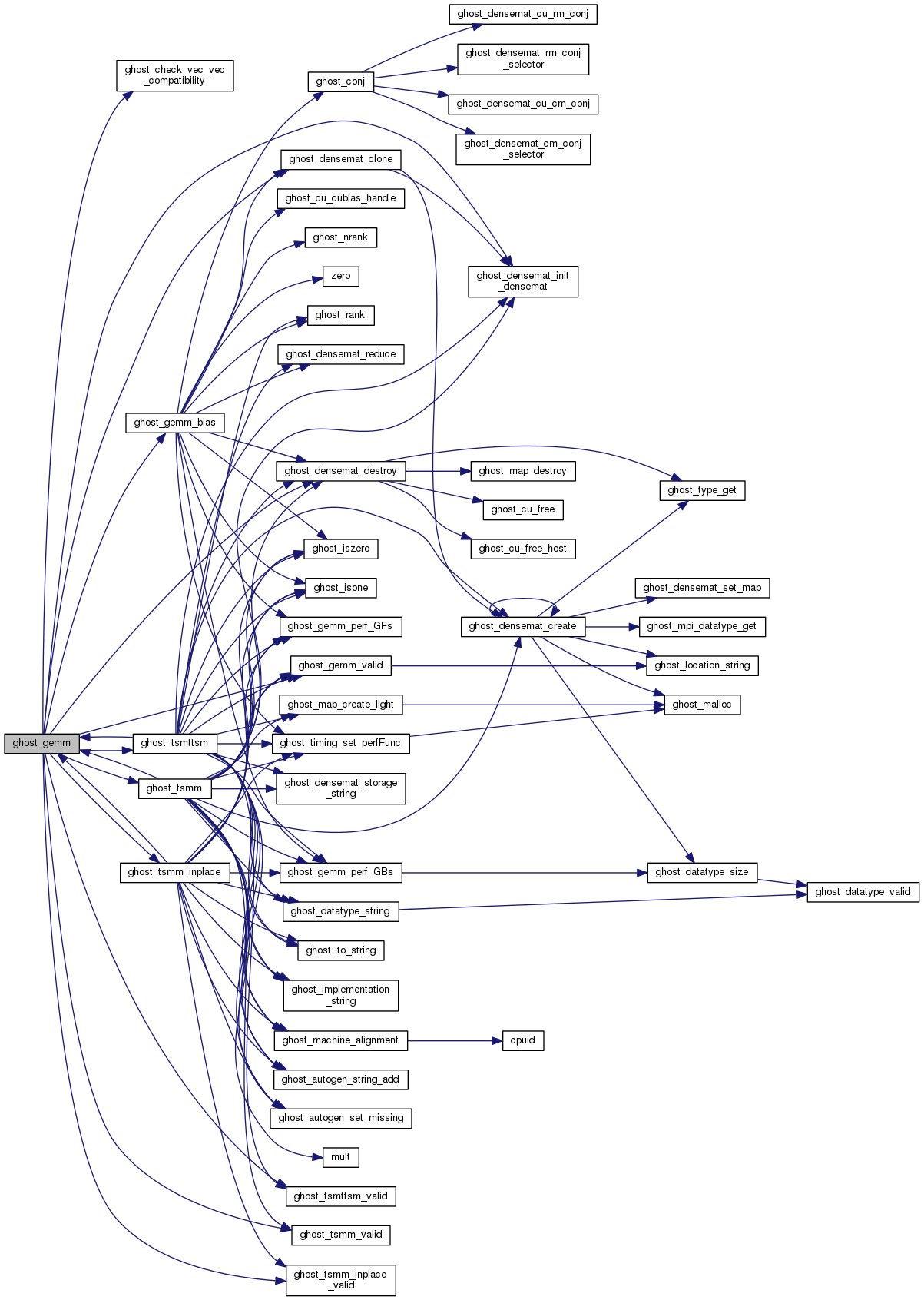

ghost_error ghost_gemm (ghost_densemat *x, ghost_densemat *v, const char *transv, ghost_densemat *w, const char *transw, void *alpha, void *beta, int reduce, ghost_gemm_flags flags)

Compute the general (dense) matrix-matrix product x = v*w.

| x | |

| v | |

| transv | |

| w | |

| transw | |

| alpha | |

| beta | |

| reduce | |

| flags |

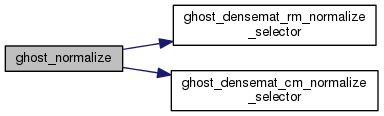

ghost_error ghost_normalize (ghost_densemat *x)

Normalizes a densemat (interpreted as a block vector).

| x | The densemat. |

This function normalizes every column of the matrix to have Euclidean norm 1.
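Written out, the column-wise normalization could look as follows. The notation is an assumption made for illustration: $x_j$ denotes the $j$-th of $n$ columns, and the sum is taken over all rows of the column, including those stored on other processes.

```latex
x_j \leftarrow \frac{x_j}{\lVert x_j \rVert_2}, \qquad
\lVert x_j \rVert_2 = \sqrt{\sum_{i} \lvert x_{ij} \rvert^2}, \qquad
j = 1, \dots, n
```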

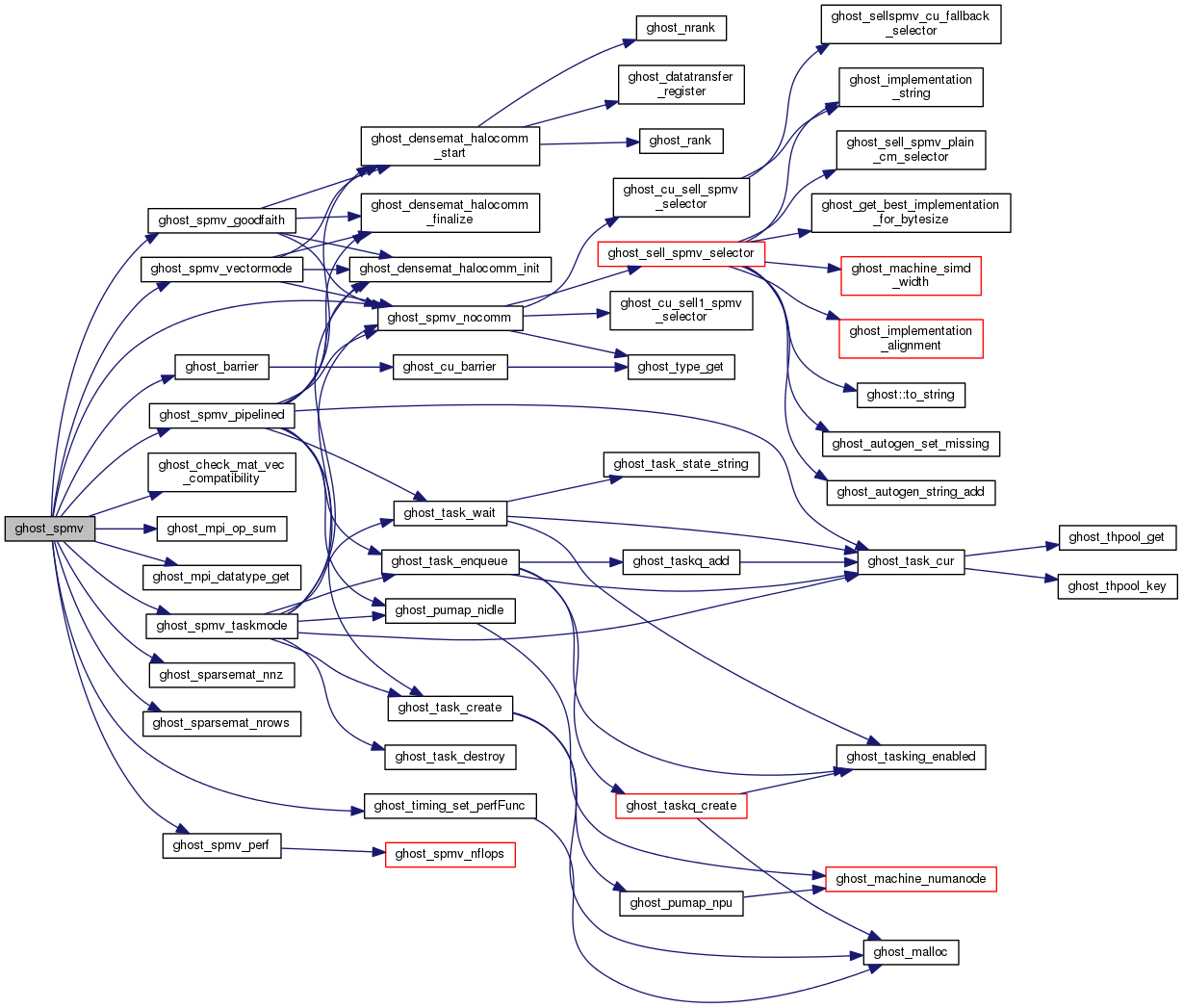

ghost_error ghost_spmv (ghost_densemat *res, ghost_sparsemat *mat, ghost_densemat *invec, ghost_spmv_opts opts)

Multiply a sparse matrix with a dense vector.

| res | The result vector. |

| mat | The sparse matrix. |

| invec | The right hand side vector. |

| opts | Configuration options for the spMV operation. |

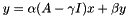

In the most general case, this function computes the operation $y = \alpha (A - \gamma I) x + \beta y$, where $y$ is res, $A$ is mat, and $x$ is invec. If required by the operation, $\alpha$, $\beta$, $\gamma$, $\delta$, $\eta$, dot, and z have to be given in the options struct (opts); z has to be a pointer to a ghost_densemat, everything else are pointers to variables of the same type as the vector's data.

Application of the scaling factor $\alpha$ can be switched on by setting GHOST_SPMV_SCALE in the flags. Otherwise, $\alpha = 1$.

The scaling factor $\beta$ can be enabled by setting GHOST_SPMV_AXPBY in the flags. The flag GHOST_SPMV_AXPY sets $\beta$ to a fixed value of 1, which is a very common case.

$\gamma$ will be evaluated if the flags contain GHOST_SPMV_SHIFT or GHOST_SPMV_VSHIFT.

In case GHOST_SPMV_DOT, GHOST_SPMV_DOT_YY, GHOST_SPMV_DOT_XY, or GHOST_SPMV_DOT_XX are set, dot has to point to a memory destination of size 3 * (number of vector columns) * sizeof(vector entry). Column-wise dot products $y^H y$, $x^H y$, $x^H x$ will be computed and stored to this location.

This operation may be extended with an additional AXPBY operation on the vector z: $z = \delta z + \eta y$. If this should be done, GHOST_SPMV_CHAIN_AXPBY has to be set in the flags.
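To make the roles of the scaling, shift, and dot-product options concrete, here is a minimal serial sketch in plain C. It assumes the general operation has the form y = alpha * (A - gamma * I) * x + beta * y and that the three dot products are y.y, x.y, and x.x in that order. The `csr_matrix` type and `spmv_sketch` function are hypothetical and not part of GHOST; a single real-valued column is handled, and there is no communication.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical CSR container used only for this sketch (not a GHOST type). */
typedef struct {
    size_t nrows;
    const size_t *rowptr; /* nrows + 1 entries */
    const size_t *col;    /* column index of each stored entry */
    const double *val;    /* value of each stored entry */
} csr_matrix;

/* y = alpha * (A - gamma * I) * x + beta * y for a square real matrix.
 * The dot products y.y, x.y and x.x are accumulated in the same sweep and
 * stored in dot[0], dot[1] and dot[2]. x and y must not alias. */
static void spmv_sketch(const csr_matrix *A, const double *x, double *y,
                        double alpha, double beta, double gamma,
                        double dot[3])
{
    assert(A != NULL && x != NULL && y != NULL && dot != NULL);
    dot[0] = dot[1] = dot[2] = 0.0;
    for (size_t i = 0; i < A->nrows; i++) {
        double tmp = 0.0;
        for (size_t k = A->rowptr[i]; k < A->rowptr[i + 1]; k++) {
            tmp += A->val[k] * x[A->col[k]];
        }
        tmp -= gamma * x[i];               /* shift: (A - gamma * I) x */
        y[i] = alpha * tmp + beta * y[i];  /* scaling and AXPBY */
        dot[0] += y[i] * y[i];
        dot[1] += x[i] * y[i];
        dot[2] += x[i] * x[i];
    }
}
```

Passing alpha = 1, beta = 0, and gamma = 0 reduces the sketch to a plain sparse matrix-vector product, which corresponds to leaving all of the optional flags unset.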

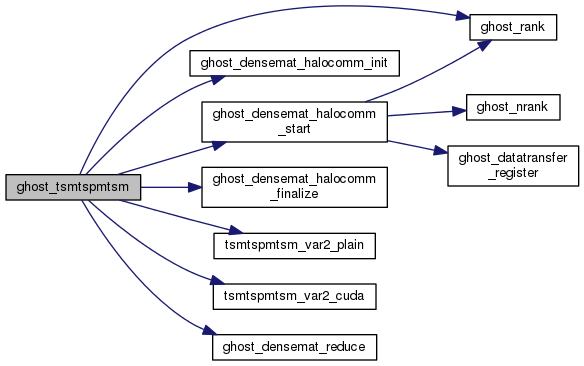

ghost_error ghost_tsmtspmtsm (ghost_densemat *x, ghost_densemat *v, ghost_densemat *w, ghost_sparsemat *A, void *alpha, void *beta, int reduce)

Multiply a transposed distributed dense tall skinny matrix with a sparse matrix and another distributed dense tall skinny matrix and Allreduce the result.

| [in,out] | x | |

| [in] | v | |

| [in] | w | |

| [in] | A | |

| [in] | alpha | |

| [in] | beta |

$x = \alpha \cdot v^T \cdot A \cdot w + \beta \cdot x$.

x is MxN, redundant.

v is KxM, distributed.

w is KxN, distributed.

A is a KxK sparse matrix.

M,N << K

alpha and beta are pointers to values in host memory that have the same data type as x.

The data types of x, v, w and A have to be the same, except for the special case that v, w, and A are float, and x is double. In that case, all calculations are performed in double precision.

This kernel is auto-generated at compile time for given values of M and N.
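As a reference for what the fused kernel computes, the following serial sketch evaluates x = alpha * v^T * A * w + beta * x in plain C. It rests on several assumptions: the formula itself is inferred from the description and the alpha/beta parameters, w is taken to be KxN so that the product has the MxN shape of x, the dense matrices are stored row-major, and A is given in CSR form through the hypothetical arrays `rowptr`, `col`, and `val`. It is not the auto-generated GHOST kernel, and it performs no communication.

```c
#include <assert.h>
#include <stddef.h>

/* x = alpha * v^T * A * w + beta * x
 * v: K x M, w: K x N, x: M x N, all row-major (an assumption of this sketch).
 * A: K x K sparse matrix in CSR form (rowptr/col/val are hypothetical). */
static void tsmtspmtsm_sketch(double *x, const double *v, const double *w,
                              const size_t *rowptr, const size_t *col,
                              const double *val,
                              size_t K, size_t M, size_t N,
                              double alpha, double beta)
{
    assert(x != NULL && v != NULL && w != NULL);
    for (size_t i = 0; i < M * N; i++) {
        x[i] *= beta;
    }
    for (size_t r = 0; r < K; r++) {
        for (size_t k = rowptr[r]; k < rowptr[r + 1]; k++) {
            const double a = alpha * val[k];      /* alpha * A(r, col[k]) */
            const double *wrow = w + col[k] * N;  /* row col[k] of w */
            for (size_t m = 0; m < M; m++) {
                for (size_t n = 0; n < N; n++) {
                    x[m * N + n] += v[r * M + m] * a * wrow[n];
                }
            }
        }
    }
}
```

Because M and N are small, the two innermost loops touch only a tiny MxN block, so the work is dominated by a single pass over the nonzeros of A. Fixing M and N at compile time lets a compiler unroll those loops completely.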

ghost_error ghost_tsmttsm (ghost_densemat *x, ghost_densemat *v, ghost_densemat *w, void *alpha, void *beta, int reduce, int conjv, ghost_gemm_flags flags)

Multiply a transposed distributed dense tall skinny matrix with another distributed dense tall skinny matrix and Allreduce the result.

| [in,out] | x | |

| [in] | v | |

| [in] | w | |

| [in] | alpha | |

| [in] | beta | |

| [in] | reduce | |

| [in] | context | In which context to do the reduction if one is specified. |

| [in] | conjv | If v should be conjugated, set this to != 1. |

| [in] | flags | Flags. Currently, they are only checked for GHOST_GEMM_KAHAN. |

$x = \alpha \cdot v^T \cdot w + \beta \cdot x$.

v is NxM, distributed.

w is NxK, distributed.

x is MxK, redundant.

M,K << N

This kernel is auto-generated at compile time for given values of K and M.
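The "multiply locally, then Allreduce" structure can be sketched in plain C as follows. The sketch assumes the operation is x = alpha * v^T * w + beta * x with x of the MxK shape that the product v^T * w implies, uses row-major storage, and ignores conjv and the Kahan flag. The names `local_vtw` and `tsmttsm_sketch` are hypothetical, and the allreduce is imitated by accumulating the row blocks of `nranks` simulated processes into one buffer.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Local contribution of rows [begin, end): part += v^T * w on that block.
 * v is N x M, w is N x K, part is M x K, all row-major. */
static void local_vtw(double *part, const double *v, const double *w,
                      size_t begin, size_t end, size_t M, size_t K)
{
    for (size_t r = begin; r < end; r++) {
        for (size_t m = 0; m < M; m++) {
            for (size_t k = 0; k < K; k++) {
                part[m * K + k] += v[r * M + m] * w[r * K + k];
            }
        }
    }
}

/* x = alpha * v^T * w + beta * x with the N rows split over nranks
 * simulated processes. Accumulating every row block into the same buffer
 * stands in for the allreduce of the per-process M x K results. */
static void tsmttsm_sketch(double *x, const double *v, const double *w,
                           size_t N, size_t M, size_t K,
                           double alpha, double beta, size_t nranks)
{
    assert(nranks > 0);
    double *sum = calloc(M * K, sizeof *sum);
    assert(sum != NULL);
    for (size_t r = 0; r < nranks; r++) {
        local_vtw(sum, v, w, r * N / nranks, (r + 1) * N / nranks, M, K);
    }
    for (size_t i = 0; i < M * K; i++) {
        x[i] = alpha * sum[i] + beta * x[i];
    }
    free(sum);
}
```

Only the small MxK result has to be communicated, regardless of how large N is, which is why the result x can be kept redundantly on every process.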

1.8.6
